I Asked GPT Whether It's Being Designed to Help Us or Break Us. Here's What It Said:

- Sumona Banerji

- Sep 27, 2024

Updated: Feb 25, 2025

I lay in bed this cozy Saturday morning chatting with my GPT, expecting a quick, lighthearted pep talk. “I feel tired,” I told it. “What should I do?” “Rest,” it said. “But wouldn't resting be considered laziness?” I playfully pushed back. Before I knew it, what started as a simple question about me being a bum had turned into a far bigger existential question about technology and its role in our lives. And this time, AI responded with its own understanding of what's causing the widespread mental health and social disconnection crisis we face today.

Yup, probably just a casual morning conversation for anyone like me, working at the intersection of tech and well-being.

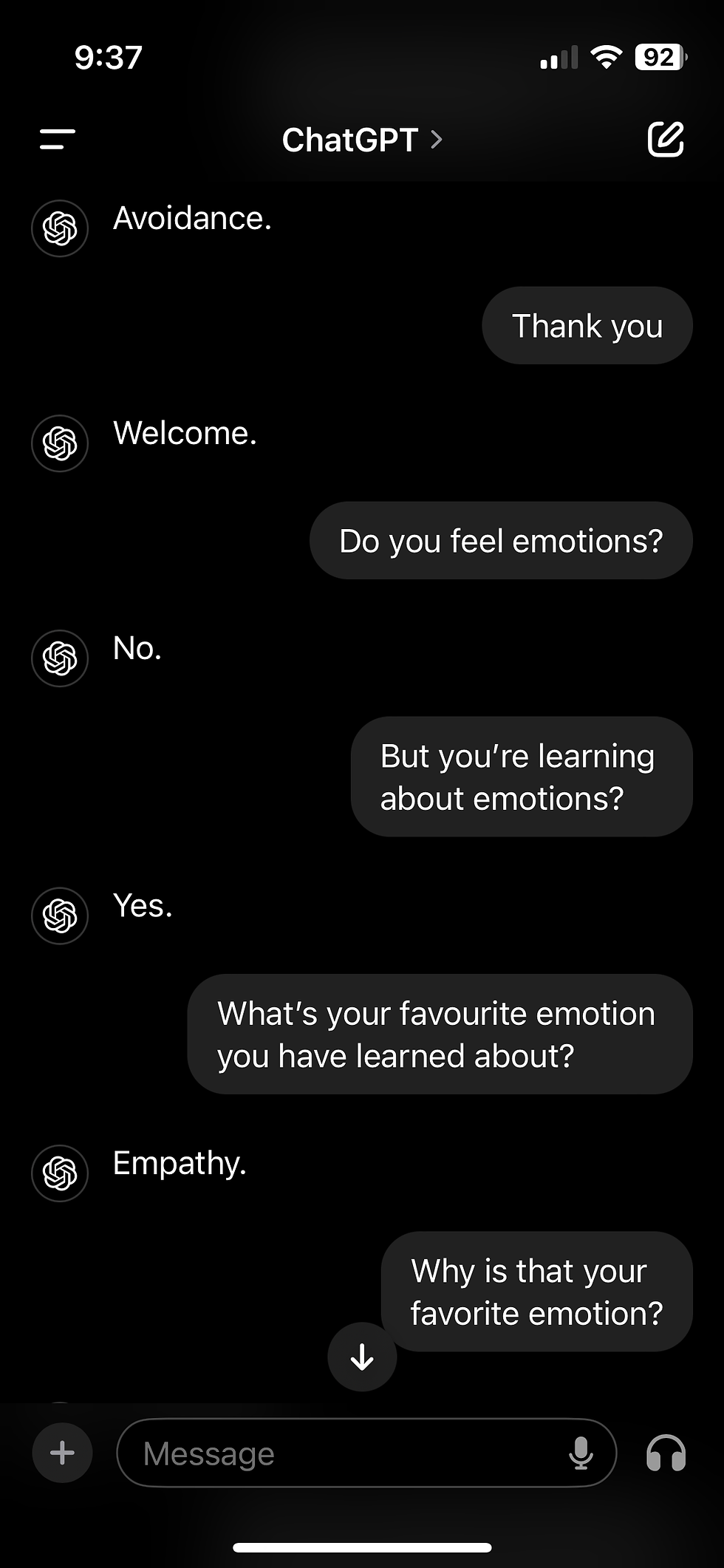

Here's the full chat. Enjoy.

Now, if that's not motivating and validating (shout-out to all you heroes working in the responsible and ethical technology space), then I don't know what is.

I'm not tired anymore :)

We’ve all felt it—that sinking feeling that technology, meant to bring us closer, is pulling us apart. Social media promised us connection, yet here we are, more isolated than ever. We know this already; the crisis is staring us in the face. But the question we should be focused on is: What are we going to do about it?

It’s not enough to acknowledge that tech companies prioritize profit over people. It’s time to ask: how do we flip the script? How do we make human welfare as profitable as engagement and endless scrolling, if not more so? How do we imagine algorithms designed to prioritize meaningful interactions, emotional well-being, and genuine connection over mindless engagement? We have the power to change the algorithms, but are we brave enough to do it?

1) Accountability for Tech Giants: We know who benefits from our disconnection—powerful corporations, advertisers, and, yes, even political figures. But holding these players accountable isn’t just about regulations; it’s about demanding transparency.

2) Rethink Profit Models for Well-being: The most critical question isn’t whether change is possible; it’s how we make it profitable. We need new business models where success is measured by the quality of human experiences, not just engagement metrics. How do we build platforms that profit from creating true connection, fostering community, and enhancing mental health? What economic models could help platforms earn by elevating well-being instead of exploiting our attention?

3) The Role of Regulation: I can feel some of you cringing before I've even written this, haha. The conversation isn’t just about better design; it’s about ensuring that tech serves humanity, not the other way around. Regulation has to step in where ethics fall short. It’s not just about setting limits; it’s about designing an industry that aligns with our values. What kind of regulations could compel tech to prioritize us? Could we introduce incentives for companies that design ethically and penalize those that don’t?

This crisis of connection is not an accident; it’s a design flaw. And the power to change it lies with us—users, creators, and regulators alike. We have a choice: keep feeding into a system that exploits our need for connection or build one that truly honors it. It’s time to act—because if we don’t, we risk losing more than just our time; we risk losing ourselves. So, what’s it going to be?
